Introduction: Why Operational Data Hubs Fail — and How to Do It Right

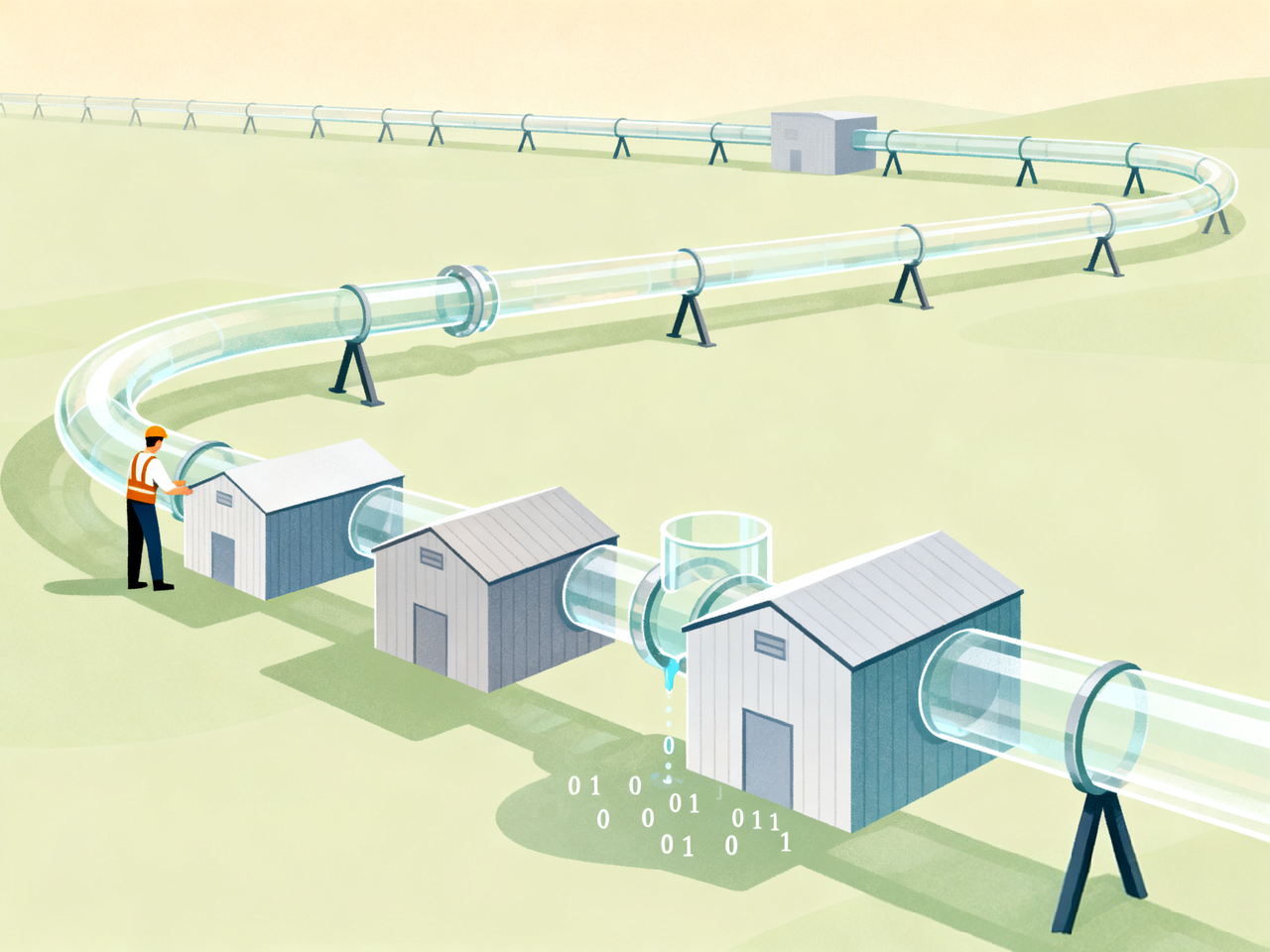

The idea of an operational data hub is compelling: unify data from fragmented systems, stream it in real time, and serve it instantly to APIs, dashboards, and downstream services.

But turning that vision into reality can be challenging. Many teams jump into implementation without fully understanding the architectural trade-offs, integration limitations, or system behaviors — and the result is an underperforming or fragile system.

In this article, we’ll walk through common pitfalls in implementing an operational data hub and show how to avoid them with proven best practices, many of which we’ve seen in real-world TapData deployments.

Pitfall 1: Confusing a Data Hub with a Data Warehouse

Why it’s a problem:

- Operational data hubs (ODHs) are designed for live, low-latency sync, not long-term storage
- Treating an ODH like a warehouse leads to stale data, performance issues, and misuse

How to avoid it:

- Scope your data hub to operational data only: data that changes frequently and needs to be exposed quickly
- Offload long-term historical queries to your existing data warehouse (DWH) or lakehouse

Pitfall 2: Ignoring Schema Drift and Evolution

Why it’s a problem:

- Static schema mappings break as source systems evolve
- Downstream systems may reject records or produce incorrect results

How to avoid it:

- Use tools like TapData that support dynamic schema discovery and field mapping
- Set up schema versioning and alerts to detect breaking changes
- Design materialized views to be schema-tolerant, so that added or missing fields do not break them
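
Schema versioning and drift alerts can start very simply. The minimal Python sketch below assumes each source table has a registered schema kept as a field-name-to-type mapping; `REGISTERED_SCHEMA`, `detect_drift`, and `tolerant_project` are hypothetical names used for illustration, not TapData APIs. It treats added fields as non-breaking, flags removed fields and type changes as breaking, and projects each record onto the fields a view expects, so unknown fields are ignored and missing ones become `None`.

```python
from dataclasses import dataclass, field
from typing import Any

# Hypothetical registered schema (version 1) for one source table.
REGISTERED_SCHEMA: dict[str, type] = {"order_id": int, "status": str, "amount": float}


@dataclass
class DriftReport:
    added: list[str] = field(default_factory=list)
    removed: list[str] = field(default_factory=list)
    type_changed: list[str] = field(default_factory=list)

    @property
    def is_breaking(self) -> bool:
        # Added fields are usually safe; removals and type changes are not.
        return bool(self.removed or self.type_changed)


def detect_drift(schema: dict[str, type], record: dict[str, Any]) -> DriftReport:
    """Compare one incoming record against the registered schema."""
    report = DriftReport()
    for name, value in record.items():
        if name not in schema:
            report.added.append(name)
        elif value is not None and not isinstance(value, schema[name]):
            report.type_changed.append(name)  # None is treated as a valid null
    for name in schema:
        if name not in record:
            report.removed.append(name)
    return report


def tolerant_project(schema: dict[str, type], record: dict[str, Any]) -> dict[str, Any]:
    """Schema-tolerant projection: ignore unknown fields, default missing ones to None."""
    return {name: record.get(name) for name in schema}


if __name__ == "__main__":
    incoming = {"order_id": 42, "status": "shipped", "amount": "19.99", "channel": "web"}
    report = detect_drift(REGISTERED_SCHEMA, incoming)
    if report.is_breaking:
        print(f"ALERT: breaking schema change: {report}")
    print(tolerant_project(REGISTERED_SCHEMA, incoming))
```

In a real pipeline the alert would go to your monitoring system, and the registered schema would be versioned alongside the pipeline configuration; exactly which changes count as "breaking" is a policy decision for each team.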

Pitfall 3: Treating All Sources the Same

Why it’s a problem:

- Applying one ingestion pattern to every source leads to high latency or inconsistent data
- Legacy systems may not expose change data in a usable format

How to avoid it:

- Choose an ingestion strategy per source: log-based change data capture (CDC), timestamp-based polling, database triggers, and so on
- Leverage a platform like TapData that abstracts source-specific CDC handling behind a unified interface
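
When a legacy source cannot expose its transaction log, timestamp polling is a common fallback. This minimal sketch assumes the source table has an `updated_at` column that is set on every insert and update; the `orders` table and the `poll_changes` function are hypothetical, and an in-memory SQLite database stands in for the real source. It keeps a high-water mark of `(updated_at, id)` rather than the timestamp alone, so rows that share a timestamp are neither skipped nor re-read across batch boundaries.

```python
import sqlite3


def poll_changes(conn: sqlite3.Connection, watermark: tuple[str, int], limit: int = 100):
    """Fetch rows changed after the (updated_at, id) high-water mark.

    Assumes `updated_at` is an ISO-8601 string maintained on every write, so
    string ordering matches time ordering. Returns (rows, new_watermark).
    """
    last_ts, last_id = watermark
    rows = conn.execute(
        """
        SELECT id, status, updated_at FROM orders
        WHERE updated_at > ? OR (updated_at = ? AND id > ?)
        ORDER BY updated_at, id
        LIMIT ?
        """,
        (last_ts, last_ts, last_id, limit),
    ).fetchall()
    # Advance the watermark to the last row seen; keep it unchanged if nothing is new.
    new_watermark = (rows[-1][2], rows[-1][0]) if rows else watermark
    return rows, new_watermark


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, status TEXT, updated_at TEXT)")
    conn.executemany(
        "INSERT INTO orders VALUES (?, ?, ?)",
        [(1, "new", "2024-01-01T10:00:00"), (2, "new", "2024-01-01T10:00:00"),
         (3, "paid", "2024-01-01T10:05:00")],
    )
    watermark = ("", 0)  # start from the beginning of the table
    batch, watermark = poll_changes(conn, watermark, limit=2)
    print(batch, watermark)  # rows 1 and 2
    batch, watermark = poll_changes(conn, watermark, limit=2)
    print(batch, watermark)  # row 3
```

In production the watermark would be persisted durably so polling resumes where it left off after a restart. The sketch also shows why log-based CDC is preferable where it is available: polling cannot see hard deletes, and it captures only a row's latest state at each poll, not every intermediate change.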

Pitfall 4: No Strategy for Backpressure and Latency Spikes

Why it’s a problem:

- Delayed data makes dashboards and API responses stale or misleading
- Unmanaged queue buildup strains system resources

How to avoid it:

- Implement rate control and batch flush thresholds to manage volume
- Use monitoring tools to detect latency spikes and trigger automatic recovery
- Design retry policies together with deduplication safeguards, so that retried writes do not create duplicate records
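
These practices fit together: a buffer that flushes on size or age, retries with exponential backoff, and a sink that tolerates the duplicates retries can produce. Here is a minimal Python sketch under stated assumptions: every event carries a `key` and a monotonically increasing `version` (for example, a source log position), and `MicroBatcher`, `with_retries`, and `VersionedUpsertSink` are hypothetical names, not part of TapData or any other product. The bounded buffer's `offer()` returns `False` when full, which serves as the backpressure signal to the producer.

```python
import time
from typing import Callable, Optional

Batch = list[dict]  # each event: {"key": ..., "version": ..., ...} (hypothetical shape)


class MicroBatcher:
    """Buffers events and flushes them when a size or age threshold is reached.

    max_batch:   flush once this many events are buffered (volume threshold)
    max_age_s:   flush once the oldest buffered event is this old (latency threshold)
    max_pending: hard cap on the buffer; when full, offer() returns False so the
                 producer can slow down instead of growing memory without limit
    """

    def __init__(self, sink: Callable[[Batch], None], max_batch: int = 500,
                 max_age_s: float = 1.0, max_pending: int = 10_000,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.sink, self.max_batch, self.max_age_s = sink, max_batch, max_age_s
        self.max_pending, self.clock = max_pending, clock
        self.buffer: Batch = []
        self.oldest: Optional[float] = None

    def offer(self, event: dict) -> bool:
        if len(self.buffer) >= self.max_pending:
            return False  # backpressure signal to the producer
        if not self.buffer:
            self.oldest = self.clock()
        self.buffer.append(event)
        self.maybe_flush()
        return True

    def maybe_flush(self) -> None:
        # Also call this from a periodic timer so idle buffers still flush on age.
        too_big = len(self.buffer) >= self.max_batch
        too_old = self.oldest is not None and self.clock() - self.oldest >= self.max_age_s
        if too_big or too_old:
            self.flush()

    def flush(self) -> None:
        if self.buffer:
            self.sink(self.buffer)  # if this raises, the buffer is kept for a later attempt
            self.buffer, self.oldest = [], None


def with_retries(fn: Callable[[Batch], None], attempts: int = 5, base_delay_s: float = 0.1,
                 sleep: Callable[[float], None] = time.sleep) -> Callable[[Batch], None]:
    """Retry a sink call with exponential backoff (0.1 s, 0.2 s, 0.4 s, ...)."""
    def wrapped(batch: Batch) -> None:
        for attempt in range(attempts):
            try:
                fn(batch)
                return
            except Exception:  # in practice, retry only errors known to be transient
                if attempt == attempts - 1:
                    raise
                sleep(base_delay_s * 2 ** attempt)
    return wrapped


class VersionedUpsertSink:
    """Idempotent sink: an event replaces the stored row only if its version is newer,
    so duplicates from retries or replays have no effect."""

    def __init__(self) -> None:
        self.rows: dict = {}

    def write(self, batch: Batch) -> None:
        for event in batch:
            current = self.rows.get(event["key"])
            if current is None or event["version"] > current["version"]:
                self.rows[event["key"]] = event


if __name__ == "__main__":
    sink = VersionedUpsertSink()
    batcher = MicroBatcher(with_retries(sink.write), max_batch=2, max_age_s=0.5)
    events = [{"key": "o1", "version": 1}, {"key": "o1", "version": 2},
              {"key": "o1", "version": 2}, {"key": "o2", "version": 1}]  # third is a duplicate
    for event in events:
        if not batcher.offer(event):
            print("buffer full; producer should pause")
    batcher.flush()  # drain anything left on shutdown
    print(sink.rows)
```

Making the write idempotent, rather than trying to guarantee exactly-once delivery upstream, keeps the retry logic simple: replaying a batch is always safe.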

Pitfall 5: Underestimating Operational Monitoring and Governance

Why it’s a problem:

- Data becomes untrustworthy without observability
- Stakeholders lose confidence in dashboards and APIs

How to avoid it:

- Use platforms that offer real-time monitoring dashboards, alerting, and task logs
- Tag pipelines by business domain for lineage tracking
- Log transformation logic and sync timestamps for auditability
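
Sync timestamps, domain tags, and lag alerts can all come from the same log record. The sketch below assumes each change carries its source commit time; it computes end-to-end lag as apply time minus commit time, writes one structured JSON log line per change, and escalates to a WARNING when lag exceeds a threshold. `record_sync`, `LAG_ALERT_S`, and the log field names are hypothetical; in a real deployment the warning would feed your alerting system.

```python
import json
import logging
from datetime import datetime, timezone
from typing import Optional

logging.basicConfig(level=logging.INFO, format="%(levelname)s %(message)s")
log = logging.getLogger("odh.audit")

LAG_ALERT_S = 5.0  # hypothetical threshold: alert when data is more than 5 s behind the source


def record_sync(pipeline: str, domain: str, transform: str,
                source_commit: datetime, applied_at: Optional[datetime] = None) -> dict:
    """Log one applied change with lineage tags and sync timestamps; warn on high lag."""
    applied_at = applied_at or datetime.now(timezone.utc)
    lag_s = (applied_at - source_commit).total_seconds()
    entry = {
        "pipeline": pipeline,
        "domain": domain,          # business-domain tag used for lineage queries
        "transform": transform,    # name/version of the transformation that was applied
        "source_commit": source_commit.isoformat(),
        "applied_at": applied_at.isoformat(),
        "lag_s": round(lag_s, 3),
        "lag_alert": lag_s > LAG_ALERT_S,
    }
    # One JSON object per line is easy to ship to a log store and query later.
    level = logging.WARNING if entry["lag_alert"] else logging.INFO
    log.log(level, json.dumps(entry))
    return entry


if __name__ == "__main__":
    commit = datetime(2024, 1, 1, 12, 0, 0, tzinfo=timezone.utc)
    record_sync("orders_to_hub", "sales", "normalize_orders@v3", commit,
                applied_at=datetime(2024, 1, 1, 12, 0, 1, tzinfo=timezone.utc))
    record_sync("orders_to_hub", "sales", "normalize_orders@v3", commit,
                applied_at=datetime(2024, 1, 1, 12, 0, 9, tzinfo=timezone.utc))
```

Because the two timestamps come from different machines, clock skew between the source and the hub distorts the lag figure, so keep clocks synchronized (for example, with NTP).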

Pitfall 6: Building Too Much Custom Code

Why it’s a problem:

- High developer effort
- Poor reusability
- Difficult to onboard new team members or troubleshoot issues

How to avoid it:

- Adopt low-code platforms like TapData that offer:
  - Visual pipeline configuration
  - Built-in CDC connectors
  - Reusable materialized views
- Focus your development on business logic, not plumbing

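Summary: Build Your Data Hub for the Long Run

An operational data hub succeeds when it is scoped to live operational data, tolerates schema change, uses an ingestion strategy suited to each source, absorbs load spikes without losing or duplicating data, is observable end to end, and keeps custom plumbing to a minimum. Address these six areas up front, and your hub will stay fast and trustworthy as the systems around it evolve.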

Ready to implement your operational data hub the right way? → See how TapData helps