Data Integration Definition and Key Concepts for 2025

Data integration unifies information from multiple sources into a coherent format, enabling businesses to meet modern data consumption requirements. By consolidating data, you can ensure accuracy and consistency, which are critical for leveraging data analytics tools effectively. In 2025, the growing complexity of enterprise data integration highlights its strategic importance. Organizations increasingly rely on advanced data integration platforms and technologies to support real-time decision-making and customer data integration. According to the 2024 Data + AI Integration Trends report, addressing data integration requirements is essential for staying competitive in a rapidly evolving landscape.

Data Integration Definition and Core Concepts

What Is Data Integration?

Data integration is the process of combining data from multiple sources into a unified and accessible format. This process ensures that data is accurate, consistent, and ready for analysis. By centralizing information, you can streamline workflows, reduce errors, and improve the quality of insights derived from your data. The data integration process typically involves extracting data from various sources, transforming it into a usable format, and loading it into a target system. This approach is essential for creating a single source of truth, which is critical for business intelligence and decision-making.

Several principles define the data integration process. These include atomicity, where each process focuses on a single function, and auditability, which ensures a clear trail for tracking data changes. Processes must also support rerun capabilities and automatic recovery to maintain data integrity. These principles enhance the reliability and flexibility of data integration services, making them indispensable for modern businesses.
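To make these principles concrete, here is a minimal sketch of a single-purpose load step that can be rerun safely and leaves an audit trail. The function name `load_orders`, the `order_id` key, and the in-memory dictionary standing in for a target system are all illustrative assumptions, not part of any specific platform.

```python
from datetime import datetime, timezone

def load_orders(target: dict, batch: list[dict], audit_log: list[dict], run_id: str) -> int:
    """Upsert a batch keyed by order_id; rerunning the same batch changes nothing."""
    changed = 0
    for row in batch:
        key = row["order_id"]
        if target.get(key) != row:      # only write when the row is new or different
            target[key] = dict(row)
            changed += 1
    # Auditability: record what this run saw and what it changed.
    audit_log.append({
        "run_id": run_id,
        "step": "load_orders",
        "rows_seen": len(batch),
        "rows_changed": changed,
        "finished_at": datetime.now(timezone.utc).isoformat(),
    })
    return changed

# Hypothetical in-memory target and batch, for illustration only.
target, audit_log = {}, []
batch = [{"order_id": 1, "total": 20.0}, {"order_id": 2, "total": 35.5}]
first = load_orders(target, batch, audit_log, run_id="run-001")
rerun = load_orders(target, batch, audit_log, run_id="run-001-retry")
```

Because the step upserts by key instead of appending, a retry after a failure does not duplicate rows, and the audit log shows that the second run changed nothing.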

Core Purpose of Data Integration

The primary goal of data integration is to make data actionable and insightful. By reconciling differences between datasets and correcting errors, you can ensure data accuracy and completeness. This process reveals patterns and insights that might remain hidden when data is siloed. For example, integrating customer data from various touchpoints can help you understand customer behavior and preferences more effectively.
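As a rough illustration of reconciling customer data from several touchpoints, the sketch below merges records by a normalized email address. The field names, the sample records, and the "first non-empty value wins" rule are assumptions chosen for the example; real matching rules are usually more involved.

```python
def merge_customer_records(*sources: list[dict]) -> dict[str, dict]:
    """Combine records by normalized email; later sources only fill in missing fields."""
    unified: dict[str, dict] = {}
    for source in sources:
        for record in source:
            key = record["email"].strip().lower()   # reconcile formatting differences
            merged = unified.setdefault(key, {"email": key})
            for field, value in record.items():
                if field == "email" or value in (None, ""):
                    continue                        # skip the key and empty values
                merged.setdefault(field, value)     # keep the first non-empty value
    return unified

# Hypothetical records from a CRM and a support desk.
crm = [{"email": "Ana@Example.com", "name": "Ana Ruiz", "phone": ""}]
support = [{"email": "ana@example.com ", "phone": "555-0100", "open_tickets": 2}]
customers = merge_customer_records(crm, support)
```

Here the two source records collapse into one customer profile that carries the name from the CRM and the phone number and ticket count from the support system.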

Modern data integration services also play a crucial role in business intelligence. They enable you to make agile improvements to products and services by providing a comprehensive view of operations. Whether you're in sales, marketing, or operations, data integration capabilities allow you to align your strategies with your business objectives, enhancing decision-making and operational efficiency.

Types of Data Integration

Manual Integration

Manual integration involves human intervention to consolidate data from different sources. You might use this approach for small-scale projects or when dealing with limited datasets. While it offers flexibility, manual integration can be time-consuming and prone to errors, making it less suitable for large-scale or real-time applications.

Middleware Integration

Middleware integration uses software to connect different systems and facilitate data exchange. This approach is ideal for organizations that need to move live data between applications in real time. Middleware acts as a bridge, orchestrating the data flow and ensuring compatibility between systems. It is particularly useful for application integration, where immediate data updates are crucial.

Application-Based Integration

Application-based integration uses software applications to locate, retrieve, and combine data from various sources into a single dataset. This method often involves specialized tools or platforms designed to handle big data integration. By transforming and loading data into a centralized system, you can enhance its usability and analytics capabilities. This approach is well-suited for businesses aiming to leverage data for strategic decision-making.

Importance of Data Integration in Business Intelligence

Enhancing Decision-Making with Unified Data

Unified data empowers you to make informed decisions by providing a comprehensive view of your business operations. When data resides in silos, it limits your ability to strategize effectively. Centralizing data in a single repository ensures accessibility across all organizational levels, enabling faster and more accurate decision-making.

Disconnected data across departments restricts strategic planning.

Centralized data enhances accessibility, improving decision-making processes.

Unified access requires robust security measures to ensure safe data flow.

For example, a mid-sized software company improved its decision-making by implementing a Master Data Management platform. This allowed teams to access accurate data independently, speeding up processes. Additionally, they enhanced data literacy through educational initiatives, enabling employees to interpret and use data effectively. By adopting a strong data integration strategy, you can achieve similar results and align your decisions with business objectives.

Tip: Establish clear data ownership roles and secure access controls to maximize the benefits of unified data.

Improving Data Accuracy and Consistency

Data integration ensures accuracy and consistency by reconciling discrepancies across multiple sources. Seamless integration connects data systems, reducing errors and creating a reliable dataset. Automated validation processes identify issues early, preventing inaccurate data from affecting your operations.

For instance, real-time integration delivers up-to-date information, enabling you to make timely decisions. This approach not only improves decision-making but also ensures that your data-driven strategies are built on a solid foundation. A well-executed data integration strategy eliminates redundancies and enhances the quality of insights, giving you a competitive edge in today’s data-driven landscape.

Supporting Real-Time Analytics

Real-time analytics relies on effective data integration to process and analyze information as it is ingested. This capability allows you to respond to market changes and customer needs instantly. For example, financial institutions use real-time analytics to detect potential fraud during transactions, triggering automated responses to protect customers.
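One simple way to picture this kind of in-flight check is a sliding-window rule that flags a card when too many transactions arrive within a short period. This is a simplified sketch, not how any particular institution detects fraud; the class name `VelocityCheck`, the thresholds, and the event data are hypothetical.

```python
from collections import defaultdict, deque

class VelocityCheck:
    """Flag a card when more than max_events transactions fall inside the time window."""

    def __init__(self, max_events: int, window_seconds: float) -> None:
        self.max_events = max_events
        self.window_seconds = window_seconds
        self._recent: dict[str, deque] = defaultdict(deque)

    def observe(self, card_id: str, timestamp: float) -> bool:
        window = self._recent[card_id]
        window.append(timestamp)
        # Drop timestamps that have aged out of the window.
        while window and timestamp - window[0] > self.window_seconds:
            window.popleft()
        return len(window) > self.max_events

# Hypothetical stream of (card_id, seconds since start) events.
events = [("c1", 0), ("c1", 10), ("c1", 20), ("c1", 25), ("c2", 30), ("c1", 200)]
check = VelocityCheck(max_events=3, window_seconds=60)
flags = [check.observe(card, ts) for card, ts in events]
```

Each event is evaluated as it arrives, so the fourth rapid transaction on card `c1` is flagged immediately instead of waiting for a nightly batch.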

Top IT service providers also leverage real-time analytics to monitor performance and prevent issues before they arise. By integrating real-time data into your business intelligence framework, you can enhance operational efficiency and make proactive decisions. This approach ensures that your business remains agile and competitive in a rapidly evolving market.

Note: Real-time data integration complements traditional ETL processes, offering scalability and high performance for faster data delivery.

Facilitating Cross-Department Collaboration

Data integration plays a pivotal role in fostering collaboration between departments by creating a unified view of organizational data. When teams operate with disconnected systems, communication gaps and inefficiencies often arise. A centralized approach to data ensures that every department can access accurate and consistent information, enabling seamless coordination and alignment of goals.

For example, an integrated customer relationship management (CRM) system can bridge the gap between IT, sales, marketing, and customer support teams. By sharing a unified view of customer data, these departments can work together to enhance customer satisfaction. In one case, this approach improved customer satisfaction by 20% and increased sales team efficiency by 30%. Similarly, the development of a mobile banking app demonstrated the power of cross-department collaboration. IT, product development, customer service, and compliance teams worked together to achieve a 50% increase in mobile banking users while reducing customer service calls by 25%.

A unified view of data also supports strategic initiatives like enterprise resource planning (ERP) systems. These systems integrate data from IT, finance, supply chain management, and human resources departments. This integration reduces operational costs and improves inventory management accuracy. In one instance, an ERP implementation led to a 15% reduction in costs and a 40% improvement in inventory accuracy.

By breaking down silos, data integration empowers departments to share insights and resources effectively. This collaboration not only enhances operational efficiency but also drives innovation. When every team has access to the same reliable data, your organization can respond to challenges and opportunities with agility and precision.

Tip: Encourage regular cross-department meetings to discuss data-driven insights and align strategies. This practice ensures that all teams benefit from the unified view provided by data integration.

Key Techniques and Tools for Data Integration

ETL (Extract, Transform, Load)

ETL is one of the most widely used data integration techniques. It involves extracting data from various sources, transforming it into a usable format, and loading it into a target system. This method is ideal for creating a structured data pipeline that ensures data consistency and accuracy. You can use ETL to handle complex transformations, making it suitable for smaller datasets with intricate requirements.

Traditional ETL systems rely on batch processing, which processes data in chunks rather than in real time. While this approach works well for relational databases, it may not meet the demands of modern data integration architecture. However, modern ETL tools now support real-time streaming and high-speed batch processing, offering greater flexibility for integrating data from diverse sources.
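The three ETL steps can be sketched with Python's standard library alone. In this illustration the source is an inline CSV string and the target is an in-memory SQLite database; the table name `orders`, the column names, and the cleaning rules are assumptions made for the example.

```python
import csv
import io
import sqlite3

# Hypothetical source data; one row has an unparseable amount.
RAW_CSV = """order_id,customer,amount
1, alice ,20.50
2,BOB,not_a_number
3,carol,12.00
"""

def extract(text: str) -> list[dict]:
    """Read rows from the source as dictionaries."""
    return list(csv.DictReader(io.StringIO(text)))

def transform(rows: list[dict]) -> list[tuple]:
    """Clean and type the rows before they reach the target."""
    clean = []
    for row in rows:
        try:
            amount = float(row["amount"])
        except ValueError:
            continue  # skip rows whose amount cannot be parsed
        clean.append((int(row["order_id"]), row["customer"].strip().title(), amount))
    return clean

def load(conn: sqlite3.Connection, rows: list[tuple]) -> None:
    """Write the transformed rows into the target table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(order_id INTEGER PRIMARY KEY, customer TEXT, amount REAL)"
    )
    conn.executemany("INSERT OR REPLACE INTO orders VALUES (?, ?, ?)", rows)
    conn.commit()

conn = sqlite3.connect(":memory:")
load(conn, transform(extract(RAW_CSV)))
loaded = conn.execute(
    "SELECT order_id, customer, amount FROM orders ORDER BY order_id"
).fetchall()
```

Note that the transformation happens in the pipeline code, before loading, so the target only ever receives cleaned rows.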

| Parameter | ETL | ELT |
|---|---|---|
| Process | Data is transformed at the staging area before loading into the target system. | Data is extracted and loaded into the target system directly. Transformation occurs in the target. |
| Speed | Time-intensive due to pre-loading transformations. | Faster, as data is loaded directly and transformed in parallel. |
| Data Volume | Best for small datasets with complex transformations. | Ideal for large datasets requiring speed and efficiency. |

ELT (Extract, Load, Transform)

ELT is a modern alternative to ETL that reverses the transformation and loading steps. In this method, you extract data from sources, load it directly into the target system, and then perform transformations within the target environment. This approach is faster and more efficient, especially for large datasets.

By leveraging the power of modern cloud-based systems, ELT enables you to process data in parallel, reducing the time required for integration. It also supports scalability, making it a preferred choice for organizations dealing with high data volumes. ELT aligns well with modern data integration methods, offering speed and flexibility for building robust data pipelines.
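For contrast with ETL, the following sketch loads raw values into the target first and then transforms them with SQL inside the target. An in-memory SQLite database stands in for a cloud warehouse here, and the table names `raw_orders` and `orders` and the filter rule are illustrative assumptions.

```python
import sqlite3

conn = sqlite3.connect(":memory:")

# Load: raw values land in the target untouched, all as text.
conn.execute("CREATE TABLE raw_orders (order_id TEXT, customer TEXT, amount TEXT)")
raw_rows = [("1", " alice ", "20.50"), ("2", "BOB", "n/a"), ("3", "carol", "12.00")]
conn.executemany("INSERT INTO raw_orders VALUES (?, ?, ?)", raw_rows)

# Transform: the target engine does the cleaning and typing.
conn.execute("""
    CREATE TABLE orders AS
    SELECT CAST(order_id AS INTEGER) AS order_id,
           LOWER(TRIM(customer))     AS customer,
           CAST(amount AS REAL)      AS amount
    FROM raw_orders
    WHERE amount GLOB '[0-9]*.[0-9]*'
""")

raw_count = conn.execute("SELECT COUNT(*) FROM raw_orders").fetchone()[0]
orders = conn.execute(
    "SELECT order_id, customer, amount FROM orders ORDER BY order_id"
).fetchall()
```

The raw table keeps every source row, so a transformation can be revised and rerun later without extracting the data again.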

Data Virtualization

Data virtualization is a cutting-edge data integration technique that allows you to access and integrate data from multiple sources without creating physical copies. This method abstracts data from its original location, enabling you to work with distributed datasets seamlessly.

Using data virtualization, you can modernize your data integration architecture by incorporating cloud applications without disrupting existing operations. This technique reduces resource requirements and enhances data accessibility, allowing you to create user-defined objects from distributed data. Additionally, it provides real-time global data access, enabling you to source information regardless of its physical location.

For example, if your organization uses both on-premises and cloud-based systems, data virtualization can unify these environments into a single, cohesive data pipeline. This approach simplifies integration processes and ensures that your team can access accurate, up-to-date information whenever needed.
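The core idea, reading from the sources at query time instead of copying them, can be sketched in a few lines. The `VirtualTable` class below is a hypothetical illustration, not a real product API, and two Python lists stand in for an on-premises system and a cloud system.

```python
from typing import Callable, Iterable, Iterator

class VirtualTable:
    """Expose several sources as one logical table; rows are fetched at query time, not copied."""

    def __init__(self) -> None:
        self._sources: dict[str, Callable[[], Iterable[dict]]] = {}

    def register(self, name: str, fetch: Callable[[], Iterable[dict]]) -> None:
        self._sources[name] = fetch

    def query(self, predicate: Callable[[dict], bool] = lambda row: True) -> Iterator[dict]:
        for name, fetch in self._sources.items():
            for row in fetch():                      # read the source when asked
                if predicate(row):
                    yield {**row, "_source": name}   # tag each row with its origin

# Hypothetical stand-ins for an on-premises and a cloud inventory system.
on_prem_rows = [{"sku": "A1", "stock": 4}]
cloud_rows = [{"sku": "B7", "stock": 0}]

inventory = VirtualTable()
inventory.register("on_prem", lambda: on_prem_rows)
inventory.register("cloud", lambda: cloud_rows)

in_stock_before = [r["sku"] for r in inventory.query(lambda r: r["stock"] > 0)]
cloud_rows[0]["stock"] = 9   # the source changes; nothing is re-copied
in_stock_after = [r["sku"] for r in inventory.query(lambda r: r["stock"] > 0)]
```

Because no copy is kept, the second query reflects the updated cloud record without any reload step.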

Tip: Consider data virtualization if you want to reduce infrastructure costs while improving data accessibility and operational efficiency.

Real-Time Data Integration

Real-time data integration enables you to process and analyze data as it is generated, ensuring immediate insights and faster decision-making. This approach is essential for businesses that need to respond quickly to dynamic market conditions or customer demands. Unlike traditional batch processing, real-time integration minimizes latency, allowing you to access up-to-date information without delays.

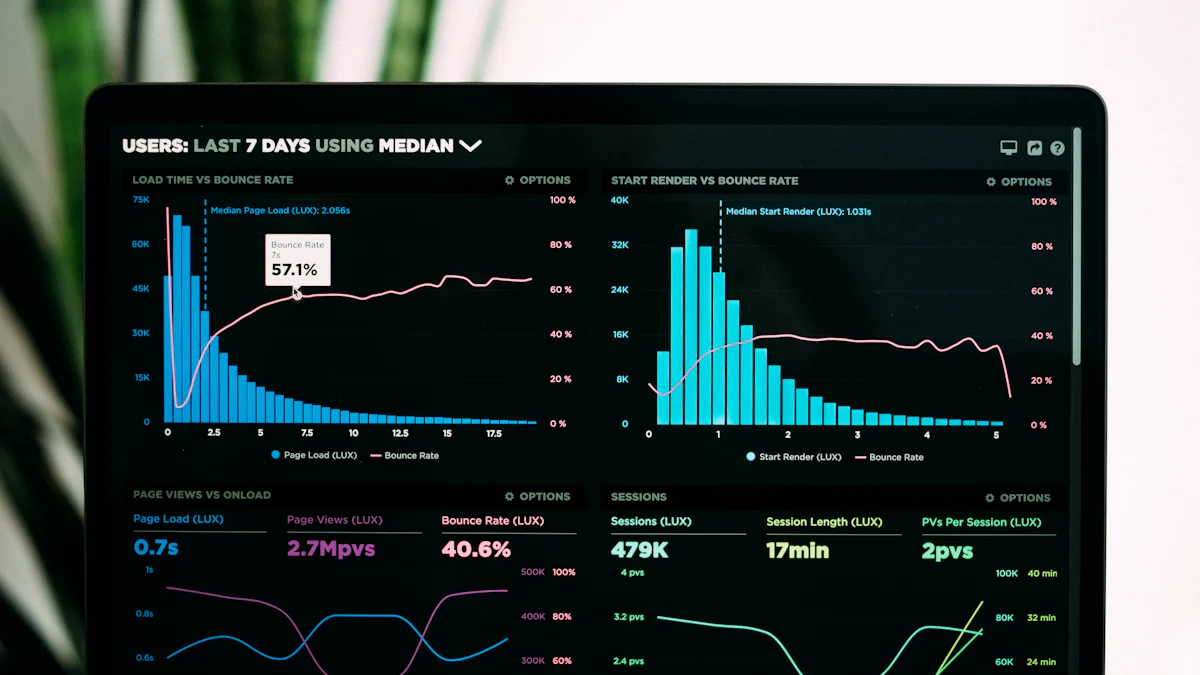

The benefits of real-time data integration include improved data quality, better decision-making, and enhanced productivity. However, challenges such as managing high data volumes, ensuring accuracy, and addressing latency issues can arise. The table below highlights these benefits and challenges:

| Benefits | Challenges |
|---|---|
| Improved Data Quality | Data Volume and Velocity |
| Better Decision Making | Data Quality and Accuracy |
| Boost Productivity | Latency and Performance |
| Cost Efficient | |

Technologies like Apache Kafka and Spark Streaming play a pivotal role in real-time data integration. These tools handle massive data flows with minimal latency, ensuring seamless data transmission. Confluent, a commercial platform built on Kafka, is a popular choice for businesses and reportedly reduces processing times by up to 70%. Additionally, Change Data Capture (CDC) propagates changes from source systems to targets as they occur, while predictive data integration helps you forecast trends and optimize operations. By adopting real-time integration, you can modernize your data integration architecture and stay competitive in a fast-paced environment.

Tools for Data Integration

Cloud-Based Solutions

Cloud-based data integration tools offer scalability, flexibility, and cost efficiency. These solutions allow you to integrate data from various sources, including on-premises systems and cloud applications, without significant infrastructure investments. Popular tools include:

TapData: Automates data pipeline creation for real-time and seamless integration.

Microsoft Azure Data Factory: Ideal for organizations using the Microsoft Azure platform.

Informatica Cloud: Known for advanced data quality and governance features.

Boomi (formerly Dell Boomi): Offers a low-code development environment for faster deployment.

These tools simplify the integration process, enabling you to focus on deriving actionable insights rather than managing infrastructure.

AI-Driven Platforms

AI-driven platforms enhance data integration by automating complex processes and improving accuracy. These platforms use machine learning to identify patterns, predict trends, and enrich data. Examples include:

Adverity: Specializes in marketing analytics and predictive modeling.

IBM InfoSphere DataStage: Excels in time-sensitive data management.

SnapLogic: Features a visual interface and a wide range of connectors.

Oracle Data Integrator (ODI): Optimized for ETL processes in Oracle environments.

AI-driven platforms also support intelligent data pipelines, reducing manual intervention and ensuring efficient operations. By leveraging these tools, you can streamline your data integration efforts and unlock the full potential of your data.

Trends Shaping Data Integration in 2025

AI and Machine Learning in Data Integration

AI and machine learning are revolutionizing how you approach data integration. These technologies automate complex processes like data extraction, transformation, and integration, saving time and resources. They adapt proactively to changing data environments, ensuring your systems remain efficient and scalable. For instance, AI can monitor data sources in real-time, triggering integration workflows when changes occur. This capability enhances consistency and accuracy while reducing manual intervention.

Machine learning shifts your focus from managing data to leveraging it for anticipatory analytics. By analyzing large volumes of real-time data, it identifies patterns and predicts outcomes, enabling you to make informed decisions quickly. AI also automates metadata generation and management, giving you greater visibility and control over your data assets. These advancements make AI and machine learning indispensable for modern data integration strategies.

Cloud-Native Integration Solutions

Cloud-native integration solutions offer unparalleled flexibility and scalability for your business. These tools dynamically allocate resources to handle fluctuating workloads, ensuring seamless performance even during peak demand. Their self-healing architecture supports continuous integration and delivery, enabling you to adopt best practices effortlessly. Faster feature delivery becomes possible, giving you a competitive edge in the market.

By leveraging cloud-native solutions, you can respond rapidly to evolving market conditions. These platforms facilitate real-time data streaming, allowing you to deliver value to users more quickly. They also enhance customer experiences by seamlessly connecting app functionality with user needs. For example, businesses using cloud-native tools often report improved scalability and faster time-to-market, critical factors for staying competitive in 2025.

Emphasis on Data Governance and Security

Data governance and security are becoming central to your data integration strategy. Governance establishes clear rules for handling data, ensuring compliance with regulations and protecting sensitive information. It also tracks data lineage, helping you identify vulnerabilities and maintain data quality. Strong governance reduces errors that could lead to security risks, fostering trust in your data.

Security operationalizes governance policies by enforcing access controls and monitoring for breaches. For example, a well-defined governance plan includes protocols for responding to security incidents, minimizing potential damage. By prioritizing governance, you can streamline data management and clarify roles and responsibilities within your organization. This approach not only strengthens compliance but also builds trust with stakeholders, ensuring your data integration efforts remain robust and secure.

Tip: Combine governance with real-time data streaming to enhance both security and operational efficiency.

Real-Time and Streaming Data Integration

Real-time and streaming data integration have become essential for businesses aiming to stay agile in a competitive environment. This approach allows you to process data as it is generated, enabling immediate insights and faster decision-making. Organizations no longer view real-time integration as a luxury. It has become a necessity for adapting to market changes and evolving consumer behavior.

Technologies like Change Data Capture (CDC) and data streaming play a pivotal role in this transformation. CDC tracks changes in source systems and updates target systems in real time, ensuring data consistency. Data streaming, on the other hand, facilitates continuous data ingestion from multiple sources, allowing you to analyze and act on information without delays. These technologies empower businesses to evolve their strategies dynamically, responding swiftly to new opportunities or challenges.
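The sketch below illustrates the idea behind CDC by comparing two snapshots of a source table and replaying only the differences on a target. Production CDC tools typically read the database transaction log instead of diffing snapshots, so treat this as a simplified stand-in; the function names and sample data are hypothetical.

```python
def capture_changes(before: dict, after: dict) -> list[tuple]:
    """Derive insert, update, and delete events by comparing two keyed snapshots."""
    changes = []
    for key, row in after.items():
        if key not in before:
            changes.append(("insert", key, row))
        elif before[key] != row:
            changes.append(("update", key, row))
    for key in before:
        if key not in after:
            changes.append(("delete", key, None))
    return changes

def apply_changes(target: dict, changes: list[tuple]) -> None:
    """Replay captured events on the target so it matches the source."""
    for op, key, row in changes:
        if op == "delete":
            target.pop(key, None)
        else:
            target[key] = dict(row)

# Hypothetical source snapshots keyed by product id.
before = {1: {"qty": 5}, 2: {"qty": 1}}
after = {1: {"qty": 4}, 3: {"qty": 7}}

changes = capture_changes(before, after)
target = {key: dict(row) for key, row in before.items()}
apply_changes(target, changes)
```

Only three small events travel to the target, which is the main appeal of CDC over reloading the whole table.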

For example, a retail company can use real-time integration to monitor inventory levels and adjust pricing based on demand fluctuations. Similarly, financial institutions rely on streaming data to detect fraudulent activities during transactions, safeguarding customer trust. By integrating real-time data into your operations, you can enhance agility, improve decision-making, and maintain a competitive edge.

Tip: Implement robust monitoring tools to ensure the accuracy and reliability of your real-time data pipelines.

Low-Code and No-Code Integration Platforms

Low-code and no-code platforms are revolutionizing how you approach data integration. These platforms simplify the process by providing intuitive interfaces and pre-built connectors, enabling you to integrate applications, systems, and data without extensive coding knowledge. This approach significantly reduces integration time, allowing you to connect systems in minutes rather than hours or days.

The advantages of low-code platforms extend beyond speed. They also enhance risk management by enabling you to adapt quickly to regulatory changes while ensuring data privacy and security. For instance, a healthcare organization can use a low-code platform to comply with new data protection laws without disrupting its operations. These platforms also empower non-technical users to participate in integration tasks, fostering collaboration across departments.

By adopting low-code or no-code solutions, you can streamline your data integration efforts, reduce costs, and improve operational efficiency. These platforms are particularly beneficial for small and medium-sized businesses that lack extensive IT resources but still require robust integration capabilities.

Note: Choose a platform that aligns with your organization's specific needs and scalability requirements.

Challenges and Best Practices for Data Integration

Common Challenges

Data Silos

Data silos occur when departments or systems store information in isolated environments, making it difficult to create a unified view. This fragmentation limits your ability to analyze data holistically and hinders collaboration across teams. To address this, you can invest in integration solutions that consolidate data into a centralized repository, such as a cloud-based data warehouse or data lake. These tools streamline data ingestion and eliminate silos, enabling seamless access to information.

Data Quality Issues

Inaccurate or inconsistent data can undermine decision-making and reduce the effectiveness of your analytics. Poor data quality often arises from errors during data ingestion or discrepancies between sources. Implementing automated validation processes and robust cleaning mechanisms ensures that your data remains reliable and actionable. By prioritizing data quality, you can build a strong foundation for your data integration efforts.
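An automated validation step might look something like the following sketch, which checks each row against a few rules and routes failures to a reject list with reasons attached. The rules and field names (`customer_id`, `email`, `amount`) are assumptions for illustration; real rules depend on your data.

```python
def validate(row: dict) -> list[str]:
    """Return a list of rule violations for one row; an empty list means the row is valid."""
    errors = []
    if not row.get("customer_id"):
        errors.append("missing customer_id")
    if "@" not in str(row.get("email", "")):
        errors.append("invalid email")
    amount = row.get("amount")
    if not isinstance(amount, (int, float)) or amount < 0:
        errors.append("amount must be a non-negative number")
    return errors

def split_valid(rows: list[dict]) -> tuple[list[dict], list[tuple]]:
    """Separate clean rows from rejected rows, keeping the reasons for each rejection."""
    valid, rejected = [], []
    for row in rows:
        errors = validate(row)
        if errors:
            rejected.append((row, errors))
        else:
            valid.append(row)
    return valid, rejected

# Hypothetical incoming rows.
rows = [
    {"customer_id": "c1", "email": "a@example.com", "amount": 10.0},
    {"customer_id": "", "email": "bad", "amount": -5},
]
valid, rejected = split_valid(rows)
```

Keeping the rejection reasons alongside each bad row makes it easier to trace quality problems back to the source system.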

Scalability and Performance Concerns

As your organization grows, your data integration solutions must scale to handle increasing volumes of data. Many businesses struggle with performance bottlenecks and high costs when expanding their integration capabilities. Designing a scalable architecture and leveraging cloud-native tools can help you overcome these challenges. These solutions dynamically allocate resources, ensuring consistent performance even during peak demand.

Best Practices

Defining Clear Objectives

Establishing clear goals is essential for successful data integration projects. Begin by identifying the specific outcomes you want to achieve, such as improving data accessibility or enabling real-time analytics. Clear objectives provide direction and help you measure the success of your integration efforts.

Selecting the Right Tools

Choosing the right tools is critical for effective data integration. Evaluate platforms based on their scalability, support for multiple data sources, and ease of use. Conduct a pilot test to assess the tool's performance with your data. Additionally, prioritize solutions that offer automation features to streamline data ingestion and metadata management.

Ensuring Data Governance and Compliance

Data governance ensures that your integration processes align with regulatory requirements and protect sensitive information. Start by conducting a data inventory and risk assessment to identify vulnerabilities. Implement technical controls, such as access restrictions, to safeguard your data. Regular audits and training programs foster a culture of compliance within your organization.
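As one example of a technical control, the sketch below filters each record down to the columns a role is allowed to see. The `POLICY` mapping, role names, and fields are hypothetical; in practice such rules would usually be enforced by the database or integration platform itself.

```python
# Hypothetical policy: which columns each role may read.
POLICY = {
    "analyst": {"customer_id", "region", "total_spend"},
    "support": {"customer_id", "email", "region"},
}

def restrict(row: dict, role: str) -> dict:
    """Return only the fields the role may see; unknown roles get nothing."""
    allowed = POLICY.get(role, set())
    return {field: value for field, value in row.items() if field in allowed}

row = {"customer_id": "c1", "email": "a@example.com", "region": "EU", "total_spend": 120.0}
analyst_view = restrict(row, "analyst")
unknown_view = restrict(row, "contractor")
```

Defaulting unknown roles to an empty view is a deliberate deny-by-default choice.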

Regular Monitoring and Optimization

Continuous monitoring helps you identify and resolve issues in your data integration processes. Track performance metrics and conduct regular tests to ensure smooth data ingestion and transfer. Optimize your integration strategy by scaling resources as needed and updating tools to meet evolving business requirements. This proactive approach enhances efficiency and minimizes disruptions.
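A lightweight way to start monitoring is to record a few metrics per pipeline run and compare them against thresholds. The metric names and the default thresholds in this sketch are illustrative assumptions, not recommended values.

```python
from dataclasses import dataclass

@dataclass
class RunMetrics:
    rows_in: int      # rows read from the source
    rows_out: int     # rows written to the target
    seconds: float    # wall-clock duration of the run

def check_run(metrics: RunMetrics, max_drop_ratio: float = 0.05,
              max_seconds: float = 60.0) -> list[str]:
    """Return alert messages for a run that lost too many rows or took too long."""
    alerts = []
    if metrics.rows_in:
        drop_ratio = (metrics.rows_in - metrics.rows_out) / metrics.rows_in
        if drop_ratio > max_drop_ratio:
            alerts.append("row drop above threshold")
    if metrics.seconds > max_seconds:
        alerts.append("run slower than threshold")
    return alerts

# Hypothetical runs: one healthy, one that should raise both alerts.
healthy = check_run(RunMetrics(rows_in=1000, rows_out=990, seconds=12.0))
degraded = check_run(RunMetrics(rows_in=1000, rows_out=800, seconds=90.0))
```

Checks like these can run after every pipeline execution and feed whatever alerting channel your team already uses.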

Tip: Regularly review your data integration best practices to adapt to new technologies and business needs.

Data integration unifies disparate data sources, enabling businesses to derive actionable insights and maintain a competitive edge. Innovations like generative integration and automation streamline processes, fostering adaptability and efficiency. These advancements empower organizations to enhance customer experiences and innovate effectively. By adopting best practices, you can ensure reliable data governance, make informed decisions, and respond swiftly to market changes. Embracing these strategies prepares your business for future challenges while leveraging data to drive strategic growth.

FAQ

What is the difference between ETL and ELT in data integration?

ETL transforms data before loading it into the target system, while ELT loads raw data first and performs transformations within the target system. ETL suits smaller datasets with complex transformations. ELT works better for large datasets, offering faster processing and scalability.

Tip: Choose ETL for legacy systems and ELT for modern cloud-based platforms.

How does data virtualization improve integration processes?

Data virtualization allows you to access and integrate data without creating physical copies. It reduces infrastructure costs, enhances data accessibility, and simplifies integration across on-premises and cloud systems. This technique ensures seamless workflows and real-time access to distributed datasets.

Note: Use data virtualization to modernize your architecture without disrupting existing operations.

Why is real-time data integration important for businesses?

Real-time data integration processes data as it is generated, enabling immediate insights and faster decision-making. It helps businesses respond quickly to market changes, detect fraud, and improve operational efficiency. This approach ensures agility and competitiveness in dynamic environments.

Example: Retailers use real-time integration to adjust inventory and pricing based on demand.

What are the key challenges in data integration?

Common challenges include data silos, poor data quality, and scalability issues. Silos hinder collaboration, while inconsistent data affects decision-making. Scalability concerns arise as data volumes grow. Address these challenges by investing in centralized repositories, automated validation, and cloud-native tools.

Tip: Regularly monitor and optimize your integration processes to overcome these challenges.

How can low-code platforms simplify data integration?

Low-code platforms provide intuitive interfaces and pre-built connectors, enabling you to integrate systems without extensive coding. They reduce integration time, improve collaboration, and adapt quickly to regulatory changes. These platforms empower non-technical users to participate in integration tasks.

Tip: Accelerate your integration efforts with low-code solutions for faster results.
